User Experience AI

Telling AI to "explain briefly" raises its error rate by 20%… striking study findings

"Good answers are not necessarily factual answers: an analysis of hallucination in leading LLMs" A third of incidents in deployed AI applications are caused by hallucination… Experts…

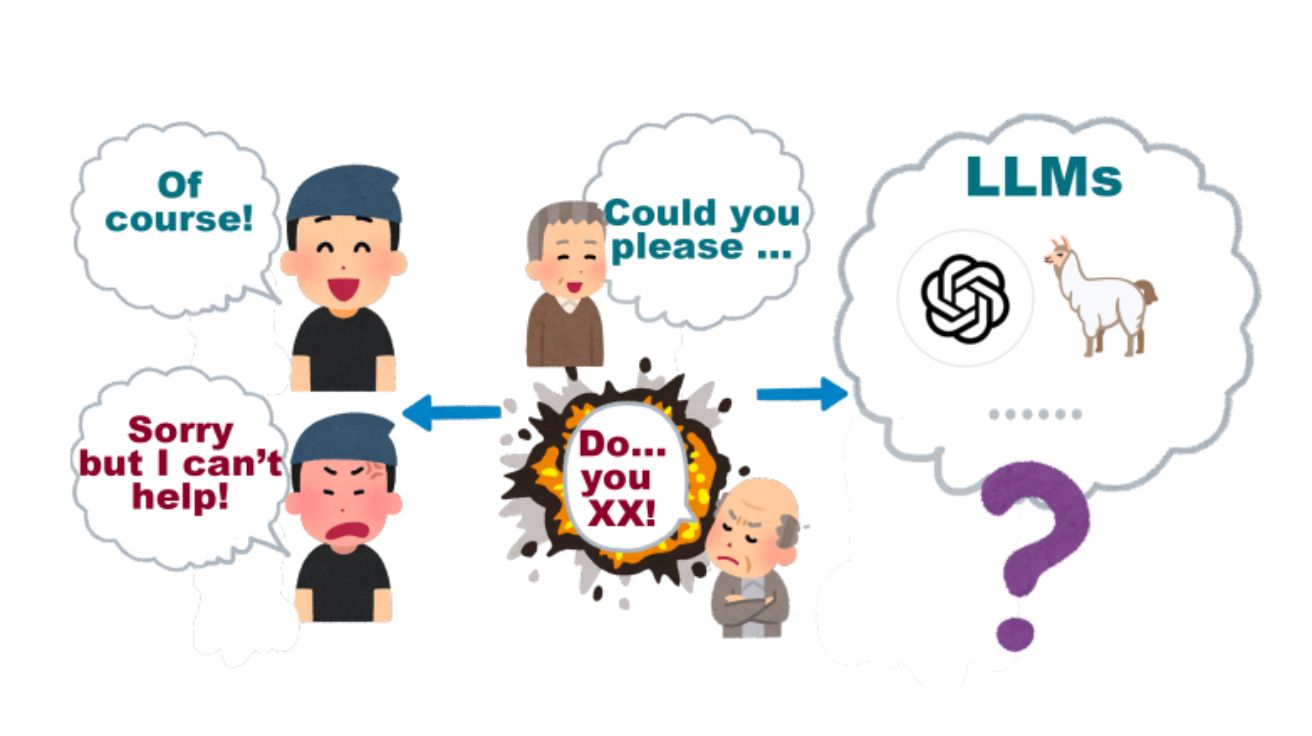

Does AI work better when you speak to it politely? Results differ markedly by language

"Should We Respect LLMs? A Cross-Lingual Study on the Influence of Prompt Politeness on LLM Performance" Depending on the politeness level of the prompt, LLM performance differs by up to…